AI in Cybersecurity: Where It Really Helps Today

Monday morning, 7:30 AM. The Chief Information Security Officer's inbox is filling up, new security alerts are pouring in, and an announced audit is looming. In this everyday high-stress situation, one central question emerges: where does Artificial Intelligence (AI) truly deliver value today, and where is it wishful thinking?

A recent study by Microsoft Research from July 2025, analyzing over 200,000 Copilot dialogues from January to September 2024, provides solid evidence. The researchers mapped real work tasks to specific job profiles and measured for each activity whether an actually usable result was produced. From this, they calculated an "AI-Applicability Score" for each profession. The key finding: AI offers measurable utility, but does not completely replace roles.

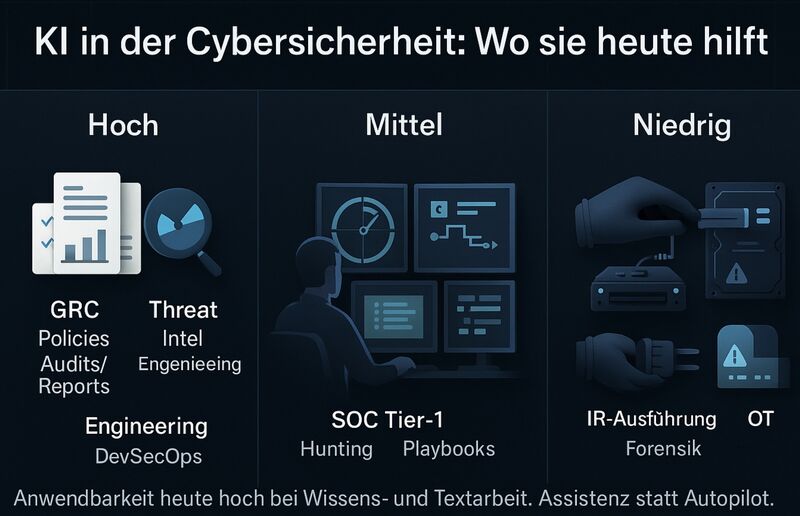

With this evidence-based perspective, we can practically assess the application of AI in cybersecurity. This article provides a structured overview of which areas AI already substantially supports today and where human expertise remains indispensable.

High AI Value: GRC, Threat Intelligence, and Security Engineering

Governance, Risk, and Compliance (GRC)

In Governance, Risk, and Compliance, AI already delivers substantial value today. Typical application scenarios include:

- Policy creation and documentation: AI can create initial drafts of security policies based on industry-standard frameworks

- Audit preparation: Automated compilation of evidence and documentation for compliance requirements

- Vendor questionnaires: Consistent answering of recurring questions about security measures

- Board reports: Condensing technical details into understandable management reports

The strength lies in information condensation, language standardization, and translating technical matters into plain language. AI provides a solid foundation, but the final decision and responsibility remain with humans. You review, supplement, and ultimately decide what stays.

Threat Intelligence and Situational Awareness

In day-to-day threat analysis, AI helps considerably with managing information overload:

- Source evaluation: Automated reviewing of different threat intelligence feeds

- Duplicate detection: Identifying redundant alerts from various sources

- Core information extraction: Filtering out relevant indicators and relationships

- Prioritization: Assessing relevance for your own infrastructure

AI summarizes, marks priorities, and keeps knowledge objects current. Human analysts fill critical gaps, provide organization-specific context, and validate conclusions.
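
The duplicate detection and prioritization steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the feed names, the `Indicator` class, and the "seen in more feeds ranks higher" heuristic are assumptions made for the example, and real prioritization would also weigh relevance to your own infrastructure.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Indicator:
    """One merged indicator together with the feeds that reported it."""
    value: str
    kind: str
    sources: set[str] = field(default_factory=set)


def normalize(value: str) -> str:
    """Undo case differences and common defanging so equal indicators compare equal."""
    return value.strip().lower().replace("[.]", ".").replace("hxxp", "http")


def merge_feeds(feeds: dict[str, list[tuple[str, str]]]) -> list[Indicator]:
    """Merge (kind, value) pairs from several feeds into one deduplicated list."""
    merged: dict[tuple[str, str], Indicator] = {}
    for feed_name, entries in feeds.items():
        for kind, raw in entries:
            key = (kind, normalize(raw))
            item = merged.setdefault(key, Indicator(value=key[1], kind=kind))
            item.sources.add(feed_name)
    # Assumed heuristic: indicators reported by more feeds come first; ties sort by value.
    return sorted(merged.values(), key=lambda i: (-len(i.sources), i.value))


# Hypothetical feeds; the domain and IP come from documentation-reserved ranges.
feeds = {
    "feed_a": [("domain", "Evil[.]example"), ("ip", "203.0.113.7")],
    "feed_b": [("domain", "evil.example")],
}
for indicator in merge_feeds(feeds):
    print(indicator.kind, indicator.value, sorted(indicator.sources))
```

The merged list is only a starting point. An analyst still decides which indicators matter for the organization.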

Security Engineering and DevSecOps

For security engineers and DevSecOps teams, AI offers valuable acceleration in development:

- Code skeletons: Basic frameworks for security tools and automations

- Configuration examples: Suggestions for secure baseline configurations

- Infrastructure-as-Code (IaC): Template creation for secure cloud resources

- Detection rules: Drafts for KQL queries, regex patterns, or YARA rules

Important: AI delivers starting points and skeletons, not production-ready solutions. Every piece of AI-generated code and every AI-generated rule requires expert review. The role of AI here is clearly assistance, not autopilot.
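
One way to support that expert review is to run a drafted rule against labeled samples before anyone considers deploying it. The sketch below assumes a hypothetical AI-drafted regex for PowerShell launched with an encoded command. The pattern, the sample command lines, and the `evaluate_rule` helper are illustrative only and do not replace review by a detection engineer.

```python
import re


def evaluate_rule(pattern: str, should_match: list[str], should_not_match: list[str]) -> dict[str, list[str]]:
    """Return the samples a draft regex gets wrong; two empty lists mean it passed."""
    rule = re.compile(pattern, re.IGNORECASE)
    return {
        "missed": [s for s in should_match if not rule.search(s)],
        "false_positives": [s for s in should_not_match if rule.search(s)],
    }


# Hypothetical AI-drafted rule: PowerShell started with a Base64-encoded command.
DRAFT_RULE = r"powershell(\.exe)?\s+.*-enc(odedcommand)?\s+[A-Za-z0-9+/=]{20,}"

report = evaluate_rule(
    DRAFT_RULE,
    should_match=[
        "powershell.exe -NoProfile -EncodedCommand SQBFAFgAIAAoAE4AZQB3AC0ATwBiAGoA",
        "PowerShell -enc SQBFAFgAIAAoAE4AZQB3AC0ATwBiAGoA",
    ],
    should_not_match=[
        "powershell.exe -File C:\\scripts\\backup.ps1",
        "notepad.exe encoded.txt",
    ],
)
print(report)
```

A clean report only shows that the draft behaves as intended on the chosen samples. Coverage of real evasion variants remains a judgment call for the reviewer.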

Medium AI Value: SOC Operations and Playbook Development

Security Operations Center (SOC) Tier-1

In the area of first-line alert handling, AI shows mixed results:

Benefits:

- Normalization of different alert formats into unified structures

- Generation of candidate lists for next investigation steps

- Formulation of clear decision trees for standard scenarios

- Support in documenting incident progressions
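
The first benefit, normalizing alert formats, can be illustrated with a minimal sketch. The two source names, their field names, and the severity scale are assumptions invented for this example. Real products use their own schemas, which would have to be mapped by someone who knows them.

```python
from datetime import datetime, timezone

# Hypothetical mappings: unified field name -> field name used by each source.
FIELD_MAPS = {
    "edr": {"host": "device_name", "severity": "risk", "title": "detection", "time": "ts"},
    "firewall": {"host": "src_host", "severity": "level", "title": "msg", "time": "epoch"},
}

# Assumed common scale; 0 is reserved for levels the mapping does not know.
SEVERITY_SCALE = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def normalize_alert(source: str, raw: dict) -> dict:
    """Translate one source-specific alert into the unified structure."""
    mapping = FIELD_MAPS[source]
    severity = str(raw[mapping["severity"]]).lower()
    return {
        "source": source,
        "host": raw[mapping["host"]].lower(),
        "title": raw[mapping["title"]],
        "severity": SEVERITY_SCALE.get(severity, 0),  # 0 flags the alert for human review
        "time": datetime.fromtimestamp(raw[mapping["time"]], tz=timezone.utc).isoformat(),
    }


alert = normalize_alert(
    "edr",
    {"device_name": "WS-042", "risk": "High", "detection": "Suspicious script", "ts": 0},
)
print(alert)
```

Keeping the mapping in plain data rather than in code makes it easy for an analyst to review and correct what an AI assistant proposed.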

Challenges:

- Context gaps: AI doesn't know all environment specifics

- Hallucinations: Invented details that sound plausible but are incorrect

- Missing experience: AI lacks the feel for subtle patterns that only experienced analysts recognize

Practical Countermeasures

To minimize risks, the following measures have proven effective:

- Input templates: Structured prompts that capture relevant minimal metadata

- Context enrichment: Providing environment information to the AI

- Review gates: Mandatory human verification before implementing AI suggestions

- Feedback loops: Systematic learning from misjudgments

Humans remain the deciding factor: They connect environment knowledge, verify plausibility, and make final decisions.
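
Input templates and review gates can be enforced in code rather than left to habit. The following is a minimal sketch under stated assumptions: the required context fields, the prompt wording, and the `Suggestion` class are hypothetical, and no particular AI service or API is implied.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed minimal metadata every request must carry.
REQUIRED_CONTEXT = ("alert_title", "asset_role", "environment", "data_sensitivity")

PROMPT_TEMPLATE = (
    "Alert: {alert_title}\n"
    "Asset role: {asset_role}\n"
    "Environment: {environment}\n"
    "Data sensitivity: {data_sensitivity}\n"
    "Task: list candidate next investigation steps. State explicitly what you do not know."
)


def build_prompt(context: dict[str, str]) -> str:
    """Input template: refuse to build a prompt while required context is missing."""
    missing = [key for key in REQUIRED_CONTEXT if not context.get(key)]
    if missing:
        raise ValueError(f"missing context fields: {', '.join(missing)}")
    return PROMPT_TEMPLATE.format(**context)


@dataclass
class Suggestion:
    """An AI suggestion plus the analyst who approved it, if any."""
    text: str
    approved_by: str | None = None


def apply_suggestion(suggestion: Suggestion) -> str:
    """Review gate: nothing is acted on without a named human approver."""
    if not suggestion.approved_by:
        raise PermissionError("suggestion has not been reviewed by an analyst")
    return f"Approved by {suggestion.approved_by}: {suggestion.text}"


prompt = build_prompt({
    "alert_title": "Impossible travel sign-in",
    "asset_role": "finance workstation",
    "environment": "production",
    "data_sensitivity": "high",
})
print(prompt)
```

Recording the approver alongside each suggestion also gives the feedback loop something concrete to learn from when a suggestion later turns out to be wrong.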

Low AI Value: Critical Operations and Physical Security

Incident Response and Forensics

In executing critical security measures, AI encounters clear limitations:

- Incident response execution: Direct intervention in running systems requires precision

- Forensic imaging: Evidence-grade data preservation tolerates no errors

- Privileged actions: Changes to core systems require clear accountability

- OT interventions: Operational Technology tolerates no experiments

- Hardware-level activities: Physical access and specific tools required

Why AI Falls Short Here

There are several reasons:

- Physical access: AI cannot directly interact with hardware

- Tool precision: Forensic tools require exact parameters and documentation

- Formal approvals: Regulatory and legal requirements for traceability

- Low error tolerance: One mistake can destroy evidence or paralyze production systems

- Experiential knowledge: Years of practice cannot be simulated through training

In these areas, established procedures, checklists, and documented approval processes dominate. At best, AI observes from the sidelines but does not sit at the control panel of critical operations.

Best Practices for AI Use in Cybersecurity

1. Set Realistic Expectations

AI is a productivity multiplier, not a replacement for expertise. Deploy AI where it supports repetitive, document-heavy, or summarizing activities.

2. Define Clear Responsibilities

Establish who reviews, approves, and takes responsibility for AI-generated content. Accountability always remains with humans.

3. Respect Data Privacy and Compliance

Verify which data you may provide to AI systems. Especially with cloud-based solutions, data protection regulations must be considered.

4. Establish Continuous Learning

Document successes and failures in AI deployment. Share insights within the team and continuously adapt prompts and processes.

5. Combine AI with Human Expertise

The best results emerge through human-AI collaboration: AI delivers speed and scale, humans contribute context, judgment, and accountability.

Conclusion: AI as a Tool, Not a Replacement

Research and practical experience paint a clear picture: AI delivers its greatest value in cybersecurity where it functions as intelligent assistance, not as an autonomous actor.

Critical steps remain human-led, with clear approval processes and complete documentation. The art lies in deploying AI where it brings genuine time savings and quality gains without compromising security or compliance.

For CISOs and security teams, this means: Experiment pragmatically, measure successes concretely, and maintain control over critical decisions. AI is here to stay as a valuable tool in the arsenal of modern cybersecurity.

How are you using AI in your security environment? Share your experiences and discuss with experts practical approaches that increase efficiency while maintaining high security standards.