AI Governance: Why Process Beats Brilliance

The Most Dangerous Assumption About Artificial Intelligence

Debates about artificial intelligence usually swing between two extremes: AI is either dismissed as primitive technology or elevated to a brilliant superintelligence. Yet the most dangerous misconception is not that AI is dumb; it is the notion that AI is brilliant.

Why? Because this assumption leads to false expectations, uncontrolled processes, and ultimately to governance gaps that can become critical, especially for mid-sized businesses.

The Erdős Problem: A Case Study in Productive AI Usage

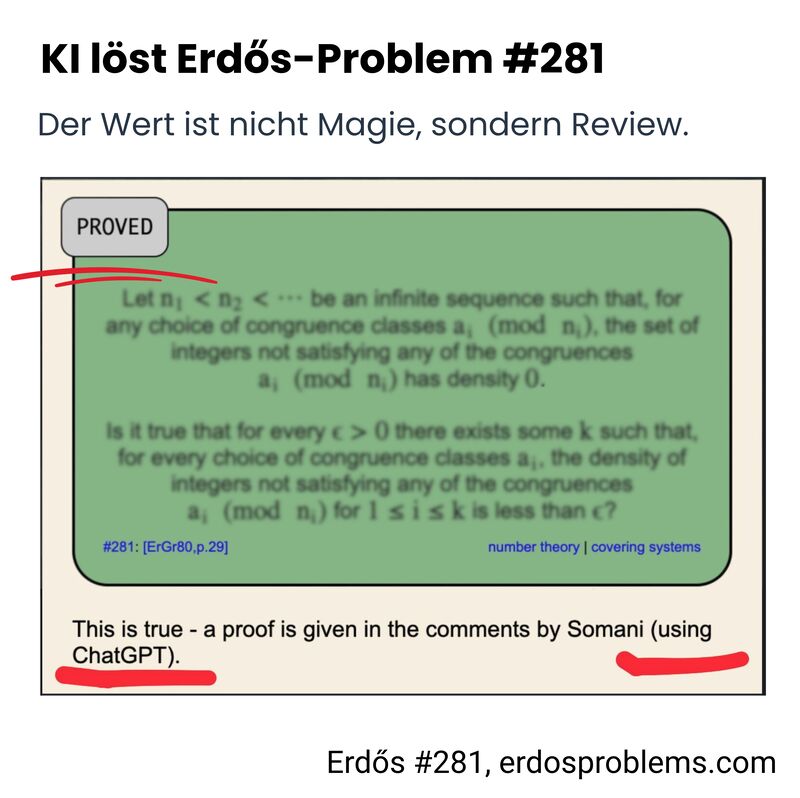

A recent example illustrates what is really at stake: Neel Somani gave ChatGPT an unsolved problem from the Erdős collection and let the model work on it for 15 minutes. The result was a complete solution path, which was then formalized and verified with Harmonic.

Harmonic is a tool that translates natural-language proofs into formally verifiable proofs. The proof held up: on the official Erdős Problems website, Problem #281 is now marked as PROVED.

What Does This Really Mean?

At first glance, this sounds like magic: an AI model solves a decades-old mathematical problem. But the comments on the publication reveal the crucial point: an alternative proof also exists, based on classical mathematical results, including work by Davenport, Erdős, and Rogers.

This is not disenchantment. This is the core of the matter: AI rarely invents from nothing. It finds, structures, combines, and reformulates existing knowledge. And that is precisely where its productive value for knowledge work lies.

AI as a Knowledge Compression Tool, Not a Genius

What this example demonstrates is not the genius of a machine, but the ability to compress knowledge when the process is right. The AI:

- Searched through existing mathematical structures

- Identified relevant approaches

- Built a coherent chain of reasoning

- Delivered the result in verifiable form

The critical difference from uncontrolled AI usage: the process was verifiable. Through formalization with Harmonic, a plausible-sounding text became a formally checked proof.

The Five Pillars of Productive AI Governance

For mid-sized companies wanting to use AI productively and securely, this results in a clear governance framework:

1. Source Attribution: Every Claim Needs a Reference

AI-generated content must be traceable. Where does the information come from? What data supports the statement? Without source attribution, AI output is nothing more than a plausible-sounding claim.

Practical tip: Add a rule to your AI workflows requiring that every AI result be accompanied by source references before it enters a decision-making process.
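For teams that route AI output through their own tooling, this rule can be enforced in code rather than left to habit. The following is a minimal sketch, not a reference implementation: `AIResult`, `MissingSourcesError`, and `admit_to_decision_process` are hypothetical names, and it assumes a source reference is simply a non-empty string such as a document ID or URL.

```python
from dataclasses import dataclass, field


@dataclass
class AIResult:
    """An AI-generated result plus the references that support it (hypothetical structure)."""
    content: str
    sources: list[str] = field(default_factory=list)


class MissingSourcesError(ValueError):
    """Raised when an AI result has no usable source reference."""


def admit_to_decision_process(result: AIResult) -> AIResult:
    """Gate: only results with at least one non-blank source reference may pass."""
    valid_sources = [s for s in result.sources if s.strip()]
    if not valid_sources:
        raise MissingSourcesError(
            "AI result has no source references; it cannot enter decision-making."
        )
    return result


# Usage (hypothetical data): passes because a reference is attached.
draft = AIResult("Summary of supplier contract terms", sources=["contract_v3.pdf"])
admit_to_decision_process(draft)
```

A real workflow would also check that the references point to documents that actually exist; this sketch only rejects results that carry no references at all.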

2. Chain of Reasoning: Make Steps Transparent

AI models tend to present results without making the path transparent. For compliance-relevant decisions, this is unacceptable.

Require from your teams:

- Step-by-step documentation of AI-supported analyses

- Explicit justifications instead of implicit plausibility

- Traceable logic instead of "black box" results

3. Verification: Actively Test Variants and Edge Cases

An AI result may sound convincing. But does it also withstand counterexamples? Does it work in edge cases?

Practical implementation:

- Test AI suggestions with extreme input values

- Check alternative scenarios

- Challenge the model with counterarguments

This is exactly what happened in the Erdős example: the proof was not simply accepted but formally verified and compared with alternative approaches.
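In software-related use cases, part of this edge-case testing can be automated. The sketch below assumes an AI has suggested a helper function (`ai_suggested_average`, hypothetical) and runs it against a few degenerate and extreme inputs before anyone relies on it; the chosen cases are illustrative, not a complete test suite.

```python
import math
from typing import Callable


def ai_suggested_average(values: list[float]) -> float:
    """Hypothetical AI-suggested helper under review: a naive arithmetic mean."""
    return sum(values) / len(values)


def check_edge_cases(func: Callable[[list[float]], float]) -> list[str]:
    """Run the candidate on extreme and degenerate inputs; return the problems found."""
    cases = {
        "empty list": [],
        "single value": [5.0],
        "very large values": [1e308, 1e308],  # the sum overflows a 64-bit float
        "negative values": [-1.0, -3.0],
    }
    problems = []
    for name, values in cases.items():
        try:
            result = func(values)
        except Exception as exc:
            problems.append(f"{name}: raised {type(exc).__name__}")
            continue
        if not math.isfinite(result):
            problems.append(f"{name}: non-finite result {result}")
    return problems


for problem in check_edge_cases(ai_suggested_average):
    print(problem)
```

Here the naive mean fails on an empty list and produces a non-finite result for very large values: weaknesses that a convincing-looking suggestion can easily hide.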

4. Review & Ownership: Four-Eyes Principle Plus Clear Accountability

AI does not replace human responsibility. Every AI-supported result needs:

- A person who reviews the result

- A person who takes responsibility for it

- A documented review note

The classic four-eyes principle also applies to AI workflows, especially in regulated areas such as finance, healthcare, or IT security.
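Where reviews are tracked in a tool, the three requirements above can be checked mechanically. A minimal sketch, assuming a hypothetical `ReviewRecord` with author, reviewer, owner, and review note; the rule that the reviewer must differ from the author is what the four-eyes principle implies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewRecord:
    """Review data for one AI-supported result (hypothetical fields)."""
    author: str
    reviewer: Optional[str]
    owner: Optional[str]
    review_note: str = ""


def four_eyes_violations(record: ReviewRecord) -> list[str]:
    """Return the rules this record violates; an empty list means it passes."""
    violations = []
    if not record.reviewer:
        violations.append("no reviewer assigned")
    elif record.reviewer == record.author:
        violations.append("reviewer must differ from author")
    if not record.owner:
        violations.append("no accountable owner")
    if not record.review_note.strip():
        violations.append("missing review note")
    return violations
```

Keeping the check as a list of violations, rather than a single yes/no, tells the reviewer exactly what is missing.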

5. Documentation: Audit-Ready Records

Especially for mid-sized businesses with strict compliance requirements, documentation is crucial:

- What result was achieved?

- Which sources were used?

- Who reviewed and approved?

- When and in what context?

These audit trails are not optional; they are the foundation for provable, legally compliant AI usage.
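The four questions above map directly onto a record structure. The sketch below assumes records are serialized as JSON (for example, one line per result in an append-only log); `AuditRecord` and its field names are hypothetical and would need to match your own documentation requirements.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One audit-trail entry for an AI-supported result (hypothetical schema)."""
    result_summary: str          # What result was achieved?
    sources: tuple[str, ...]     # Which sources were used?
    reviewed_by: str             # Who reviewed...
    approved_by: str             # ...and who approved?
    context: str                 # In what context?
    timestamp: str = field(      # When? Recorded in UTC, ISO 8601 format.
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize to a single JSON line, suitable for appending to a log file."""
        return json.dumps(asdict(self), sort_keys=True)
```

Making the record immutable (`frozen=True`) prevents accidental edits in code, but it is no substitute for storage-level protection such as write-once storage or signed logs.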

Where Do You Need Strict Audit Trails, Where Is Pragmatism Enough?

Not every AI application requires the same governance effort. The question is: where are the critical points?

Strict audit trails are mandatory for:

- Compliance-relevant decisions

- Financial transactions

- Legally binding documents

- Safety-critical systems

- Personal data (GDPR)

A pragmatic four-eyes principle is sufficient for:

- Internal research

- Drafts and brainstorming

- Non-critical text work

- Exploratory analyses

The risk class determines the degree of governance. A one-size-fits-all approach is neither practical nor sensible.
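The risk-class idea can be made explicit as a lookup that teams consult before starting an AI use case. A minimal sketch, assuming each use case is tagged with one or more category labels; the labels mirror the two lists above but are hypothetical identifiers.

```python
from enum import Enum


class Governance(Enum):
    STRICT_AUDIT_TRAIL = "strict audit trail"
    PRAGMATIC_FOUR_EYES = "pragmatic four-eyes review"


# Hypothetical labels mirroring the two lists above.
STRICT_CATEGORIES = {
    "compliance_decision",
    "financial_transaction",
    "legal_document",
    "safety_critical",
    "personal_data",
}
PRAGMATIC_CATEGORIES = {
    "internal_research",
    "draft_or_brainstorm",
    "non_critical_text",
    "exploratory_analysis",
}


def required_governance(categories: set[str]) -> Governance:
    """Any strict, unknown, or missing category triggers the strict level."""
    unknown = categories - STRICT_CATEGORIES - PRAGMATIC_CATEGORIES
    if not categories or unknown or categories & STRICT_CATEGORIES:
        return Governance.STRICT_AUDIT_TRAIL
    return Governance.PRAGMATIC_FOUR_EYES
```

Treating untagged or unknown use cases as strict is a deliberate fail-safe choice in this sketch, not something the framework above prescribes.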

AI as a Turbocharger for Knowledge Work: The Reality Check

Back to the initial question: is AI brilliant or dumb? Neither. AI is a tool for knowledge compression that becomes productive when you force it into a verifiable process.

The Erdős example shows that combining AI-supported exploration with formal verification leads to robust results. It was not the supposed genius of the AI that was decisive, but the structured process around it.

Implementing Governance in Your Organization

For practical implementation in mid-sized businesses, consider these concrete steps:

Start with risk assessment: Categorize your AI use cases by risk level. Financial forecasting requires different controls than content drafting.

Define clear workflows: Document exactly how AI outputs should be reviewed, who is responsible, and what documentation is required.

Train your teams: Ensure everyone understands that AI is a tool, not an authority. Critical thinking remains essential.

Build technical safeguards: Implement systems that enforce source attribution, version control, and review processes.

Regular audits: Review your AI usage patterns periodically. Are processes being followed? Are results reliable?
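Periodic audits become cheaper when review records are machine-readable. The sketch below assumes each logged AI result is a dictionary with hypothetical `author`, `reviewed_by`, and `sources` keys, and counts how many records satisfy the source-attribution and four-eyes rules.

```python
from collections import Counter


def audit_summary(records: list[dict]) -> dict:
    """Summarize rule compliance across logged AI results (hypothetical record format)."""
    issues = Counter()
    compliant = 0
    for rec in records:
        has_sources = bool(rec.get("sources"))
        reviewer = rec.get("reviewed_by")
        if not has_sources:
            issues["missing sources"] += 1
        if not reviewer:
            issues["missing reviewer"] += 1
        elif reviewer == rec.get("author"):
            issues["self-reviewed"] += 1
        # A record is compliant only if it has sources and an independent reviewer.
        if has_sources and reviewer and reviewer != rec.get("author"):
            compliant += 1
    return {"total": len(records), "compliant": compliant, "issues": dict(issues)}
```

A falling share of compliant records is a signal to revisit training or tooling, not proof that the underlying results are wrong.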

The Cultural Shift: From AI Worship to AI Mastery

Perhaps the most important insight is cultural. Organizations that succeed with AI don't treat it as either a miraculous solution or a dangerous threat. They treat it as what it is: a powerful tool that requires discipline and structure.

This means:

- Demystifying AI within your organization

- Empowering people to use AI confidently but critically

- Building systems that ensure accountability

- Creating transparency in how AI influences decisions

The Erdős problem solution is impressive not because AI is brilliant, but because someone created a process that made AI's capabilities verifiable and useful.

Conclusion: Process Creates Value in Regular Operations

For mid-sized businesses, this means:

- Use AI as a productivity tool, not as an oracle

- Implement clear processes for AI-supported knowledge work

- Create traceability through documentation and review

- Scale governance according to risk class

- Remain accountable for what AI produces

This delivers impact in day-to-day operations without sacrificing risk control or traceability. AI does not become valuable because it is brilliant, but because it is embedded in an intelligent process.

The critical question for your organization is: Where do you need strict audit trails for AI usage, and where is a pragmatic four-eyes principle sufficient? The answer determines how productive and secure your AI strategy will be.

The most dangerous AI lie is not that AI is dumb, but that AI is brilliant. The truth is more useful: AI is a tool that becomes powerful through good governance. Master the process, and you master the technology.